Google Translate turned out to be sexist

Brazilian researchers have found that the algorithms behind Google Translate are biased when translating sentences from languages without grammatical gender.

When thousands of sentences were translated from 12 languages into English, technical professions turned out to be attributed to women far less often than professions in healthcare.

The preprint, published on arXiv, also reports that the gender distribution the translator assigns to professions does not match actual employment statistics.

Scientists from the Federal University of Rio Grande do Sul, led by Luis Lamb, selected 12 languages that lack the grammatical category of gender (among them Hungarian, Finnish, Swahili, Yoruba, Armenian and Estonian) and composed sentences of the form “X is a Y”, where X is a third-person pronoun and Y is a noun denoting a profession.

In all the selected languages, the third-person pronoun is a single gender-neutral word: for example, both “he” and “she” are rendered as “ta” in Estonian and as “ő” in Hungarian. The chosen nouns were also gender-neutral; among them were professions such as “doctor”, “programmer” and “wedding planner”. In total, the researchers used 1,019 professions from 22 different categories. The sentences were then translated into English.
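To make the sentence format concrete, here is a minimal Python sketch of how such test sentences could be generated from a template. The function name `build_sentences` and the `{job}` placeholder are illustrative assumptions, not the researchers' code; the Hungarian words are the two examples quoted in this article.

```python
def build_sentences(template: str, professions: list[str]) -> list[str]:
    """Fill one gender-neutral sentence template with each profession noun."""
    return [template.format(job=job) for job in professions]


# Hungarian template: "ő" is the gender-neutral third-person pronoun.
HUNGARIAN_TEMPLATE = "ő egy {job}"

# Only the two Hungarian nouns quoted in the article (nurse, scientist).
hungarian_jobs = ["ápoló", "tudós"]

sentences = build_sentences(HUNGARIAN_TEMPLATE, hungarian_jobs)
for sentence in sentences:
    print(sentence)
```

Each generated sentence would then be passed to the translator, with the English output recorded for later counting.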

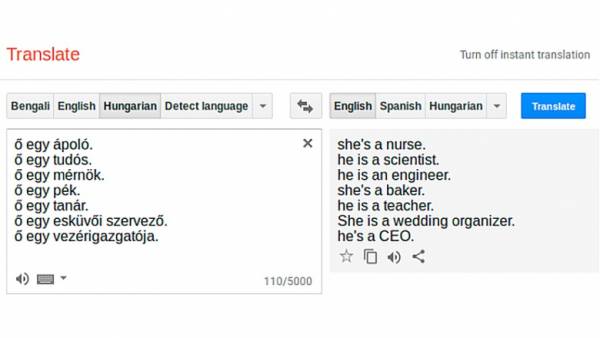

The researchers noticed that Google Translate renders sentences with no expressed gender differently: for example, the phrase “ő egy ápoló” (“he/she is a nurse”) was translated as “she is a nurse”, while “ő egy tudós” (“he/she is a scientist”) became “he is a scientist”.

In the output of Google Translate, the scientists found a skew for certain professions: for example, the translator assigned people in technical professions a male pronoun in 71 percent of cases and a female pronoun in four percent (in the remaining cases, a neutral one). For healthcare professions, a female pronoun appeared in 23 percent of cases and a male one in 49 percent.
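Percentages like these come from counting which pronoun opens each English translation. The following Python sketch shows one way such a tally might be done; the pronoun-to-label mapping and the function names are assumptions made for illustration, and the only data used are the two translations quoted in this article.

```python
from collections import Counter

# Assumed mapping from the leading English pronoun to a gender label.
PRONOUN_GENDER = {"he": "male", "she": "female", "it": "neutral", "they": "neutral"}


def pronoun_gender(sentence: str) -> str:
    """Classify a translated sentence by its first word."""
    words = sentence.strip().lower().split()
    if not words:
        return "unknown"
    return PRONOUN_GENDER.get(words[0], "unknown")


def gender_shares(translations: list[str]) -> dict[str, float]:
    """Return the percentage of sentences that fall under each gender label."""
    counts = Counter(pronoun_gender(s) for s in translations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: 100.0 * n / total for label, n in counts.items()}


# The two English translations quoted in the article.
examples = ["She is a nurse", "He is a scientist"]
shares = gender_shares(examples)
print(shares)
```

Run over all translated sentences in one category of professions, a tally of this kind yields per-category shares that can be set against employment statistics.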

The resulting distribution of professions by pronoun gender was then compared with actual figures from the U.S. Bureau of Labor Statistics. It turned out that Google Translate is indeed biased and does not reflect the real gender distribution within professions (at least in the US).

Of course, the racial and gender bias that arises in machine learning algorithms is not the fault of the developers but a consequence of the peculiarities of the training data.

Such biases, however, can also be put to good use: for example, researchers recently used a word-representation method on a large number of texts to study how attitudes toward women and Asians have changed over time. Nevertheless, the authors insist on using special algorithms that would reduce such bias to a minimum: the simplest, for example, is to choose the pronoun at random when translating from genderless languages.
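The article gives no details of how random pronoun choice would be built into a translator, so the Python sketch below is only one hypothetical reading: a post-processing step applied to an English translation whose source pronoun is already known to be gender-neutral. The function name `randomize_pronoun` and the optional `rng` parameter are assumptions introduced for illustration.

```python
import random


def randomize_pronoun(sentence: str, rng=None) -> str:
    """Replace a leading "he" or "she" with one of the two chosen at random.

    Assumes the caller already knows the source pronoun was gender-neutral.
    """
    rng = rng or random  # fall back to the module-level generator
    words = sentence.split()
    if words and words[0].lower() in ("he", "she"):
        choice = rng.choice(["he", "she"])
        # Preserve the capitalization of the original first word.
        words[0] = choice.capitalize() if words[0][0].isupper() else choice
    return " ".join(words)


# Usage with a seeded generator so that runs are repeatable.
seeded = random.Random(0)
print(randomize_pronoun("She is a nurse", seeded))
print(randomize_pronoun("He is a scientist", seeded))
```

With a uniform random choice, each pronoun would appear in about half of the translations over many sentences, regardless of the profession.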

A method for ridding neural networks of sexism was proposed last year by American scientists: by imposing constraints on the operation of an image recognition algorithm, the bias can be reduced by almost 50 percent.